Profanity Detector

A machine learning project that detects profanity in tweets and comments.

Machine Learning

Our profanity detection system uses a Logistic Regression model for binary classification: it predicts whether a given tweet or comment contains profanity. Here’s a detailed breakdown of our machine learning approach:

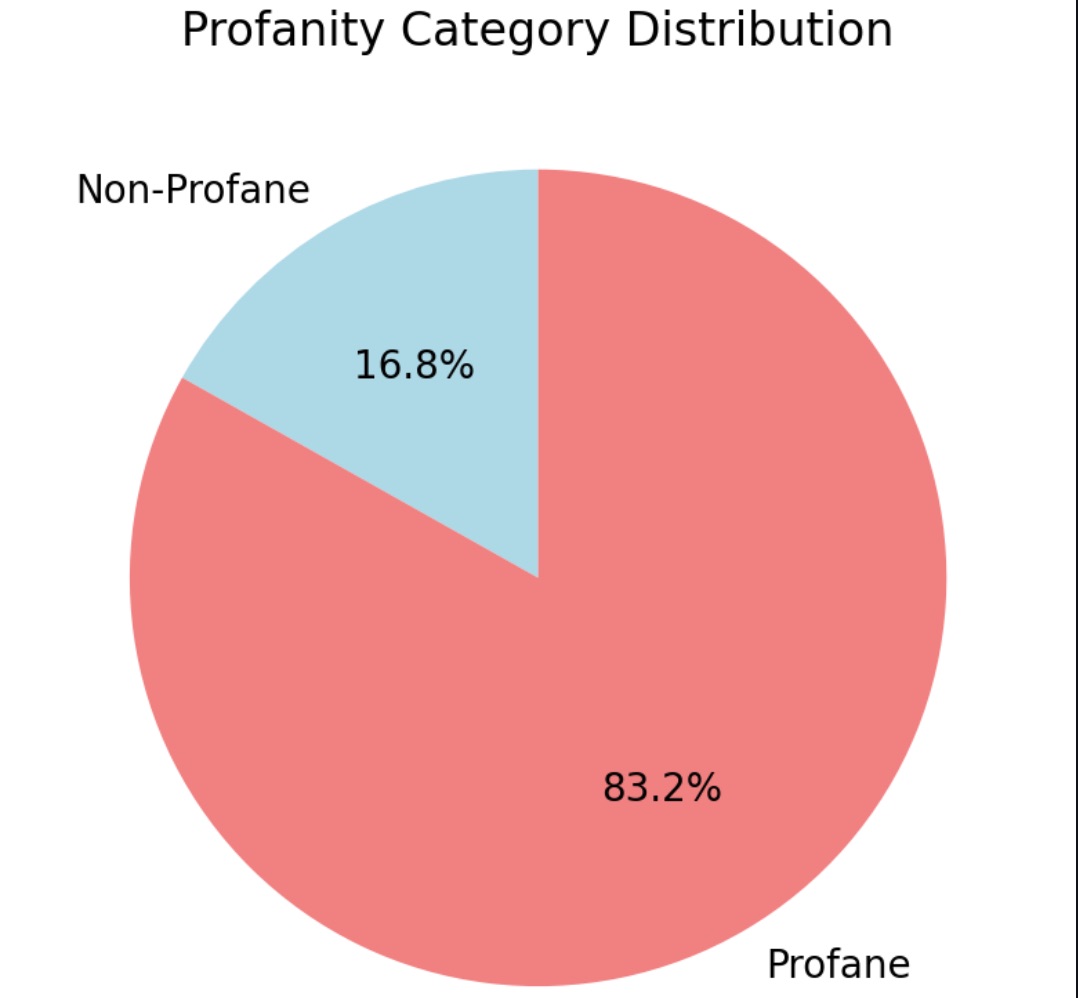

A pie chart showcases the percentage distribution of profane versus non-profane tweets in the test dataset. This visualization provides an immediate overview of the class balance in our data, highlighting any potential imbalances that might affect the model’s performance or interpretation of results.

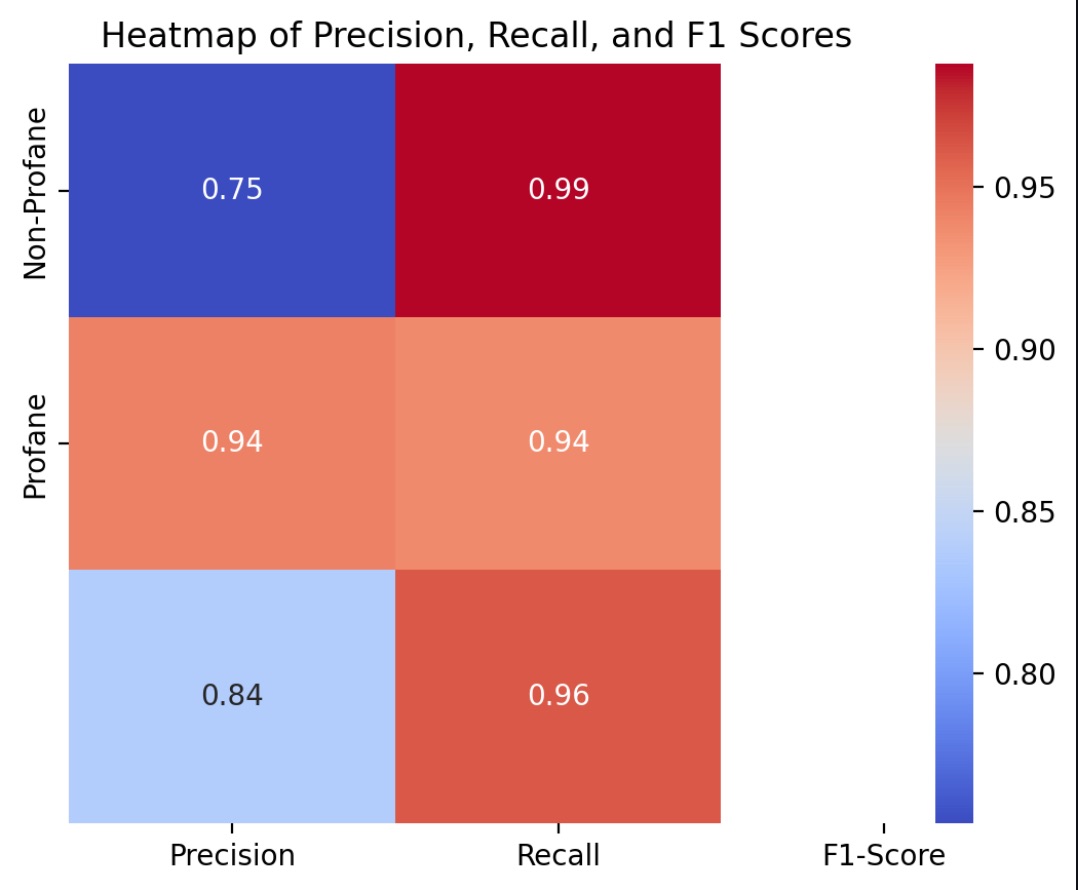

This heatmap visualizes the precision, recall, and F1-scores for both profane and non-profane classes. The color intensity represents the magnitude of each metric, allowing for quick comparison across different performance aspects of the model. This visualization is particularly useful for identifying areas where the model excels or needs improvement, facilitating targeted optimization efforts.
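For readers who want to see where the heatmap values come from, here is a minimal sketch of how precision, recall, and F1 follow from raw counts for one class. The function name and the counts are made up for illustration; they are not results from this project.

```python
def classification_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall, and F1 for one class from its raw counts.

    tp: items of this class predicted as this class
    fp: items of the other class predicted as this class
    fn: items of this class predicted as the other class
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for the profane class, for illustration only.
metrics = classification_metrics(tp=8, fp=2, fn=2)
print(metrics)
```

Computing the same three values once per class gives the grid that the heatmap colors.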

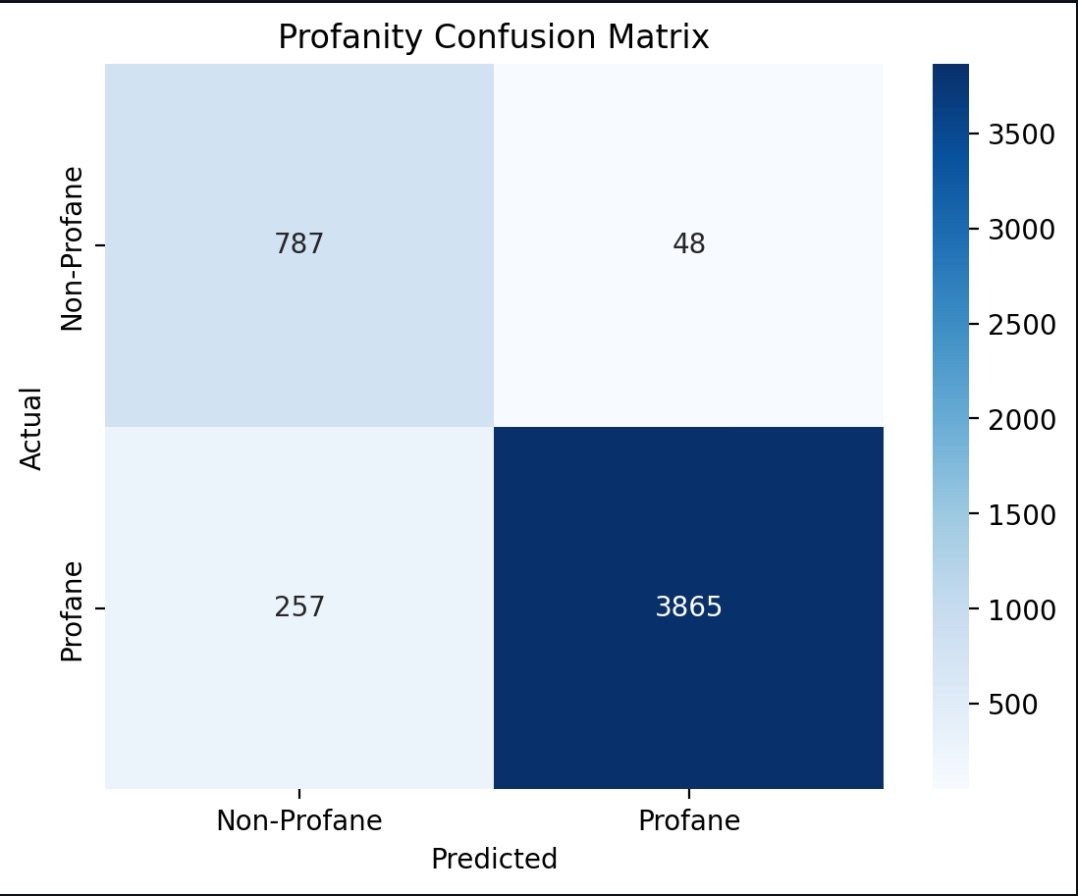

This heatmap displays the confusion matrix, illustrating the number of correct and incorrect predictions for both profane and non-profane tweets. The matrix is color-coded, with darker colors representing higher numbers of predictions. This visualization allows users to quickly assess the model’s overall accuracy and identify any significant patterns in misclassifications.
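As a rough illustration of what the matrix contains, the sketch below tallies predictions into a 2x2 grid. It assumes labels are encoded as 0 (non-profane) and 1 (profane), which may differ from the project's actual encoding, and the label lists are invented.

```python
from collections import Counter


def confusion_matrix_counts(y_true, y_pred):
    """Tally (actual, predicted) pairs into a 2x2 matrix.

    Rows are actual labels and columns are predicted labels,
    both ordered as [non-profane (0), profane (1)].
    """
    pairs = Counter(zip(y_true, y_pred))
    return [[pairs[(actual, predicted)] for predicted in (0, 1)]
            for actual in (0, 1)]


# Invented labels, for illustration only.
y_true = [0, 0, 0, 1, 1, 1, 0, 1]
y_pred = [0, 0, 1, 1, 1, 0, 0, 1]

matrix = confusion_matrix_counts(y_true, y_pred)
(tn, fp), (fn, tp) = matrix

# Accuracy is the share of predictions on the diagonal.
accuracy = (tn + tp) / len(y_true)
print(matrix, accuracy)
```

The off-diagonal cells are the misclassifications: `fp` counts clean texts flagged as profane, and `fn` counts profane texts that slipped through.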

What: We chose Logistic Regression as our core algorithm. It’s a probabilistic classifier well suited to binary classification tasks. In our context, it predicts the probability of a text being profane based on its features. We judged it the best fit for this task after weighing accuracy, computation time, and scalability.

How: The development of our machine learning model involved several key steps:

- Feature Extraction: We converted the text data into TF-IDF vectors, limiting the vocabulary to 5,000 features. TF-IDF weights each term by how often it appears in a text, discounted by how common it is across the dataset, so distinctive words carry more weight.

- Model Training: We applied SMOTE (Synthetic Minority Over-sampling Technique) to handle class imbalance, then trained the Logistic Regression model on the resampled dataset. We used default hyperparameters for initial training.

- Model Saving: Once trained, we saved the model and vectorizer using pickle, allowing for easy reuse in our Streamlit application.

Why: We selected Logistic Regression for several compelling reasons:

- Efficiency: It provides quick predictions, making it suitable for real-time applications like content moderation.

- Simplicity and Interpretability: The algorithm is relatively easy to understand and explain, which is crucial for transparency in content moderation systems.

- Probabilistic Output: Logistic Regression offers flexibility with probability thresholds. This allows for adjusting the strictness of the moderation policy as needed.

- Low Computational Cost: Both for training and real-time use, Logistic Regression is computationally inexpensive, making it ideal for deployment in various environments.
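To make the point about probability thresholds concrete, here is a small sketch of how a logistic score becomes a probability and how the threshold changes the decision. The function names, the score, and the threshold values are hypothetical and chosen only for illustration.

```python
import math


def profanity_probability(score: float) -> float:
    """Logistic (sigmoid) function: maps a linear score to a value in (0, 1).

    The two branches are equivalent; splitting on the sign avoids
    overflow in math.exp for large negative scores.
    """
    if score >= 0:
        return 1.0 / (1.0 + math.exp(-score))
    e = math.exp(score)
    return e / (1.0 + e)


def flag_as_profane(probability: float, threshold: float = 0.5) -> bool:
    """Flag the text when its probability reaches the chosen threshold."""
    return probability >= threshold


# Hypothetical linear score for one comment (probability is roughly 0.6).
p = profanity_probability(0.4)

lenient = flag_as_profane(p, threshold=0.8)  # flags only high-confidence cases
strict = flag_as_profane(p, threshold=0.3)   # flags borderline cases too
print(p, lenient, strict)
```

Lowering the threshold makes moderation stricter at the cost of more false positives; raising it does the opposite.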

While more complex models like neural networks could potentially offer higher accuracy, we found that Logistic Regression provided a good balance between performance, interpretability, and computational efficiency for our specific use case.